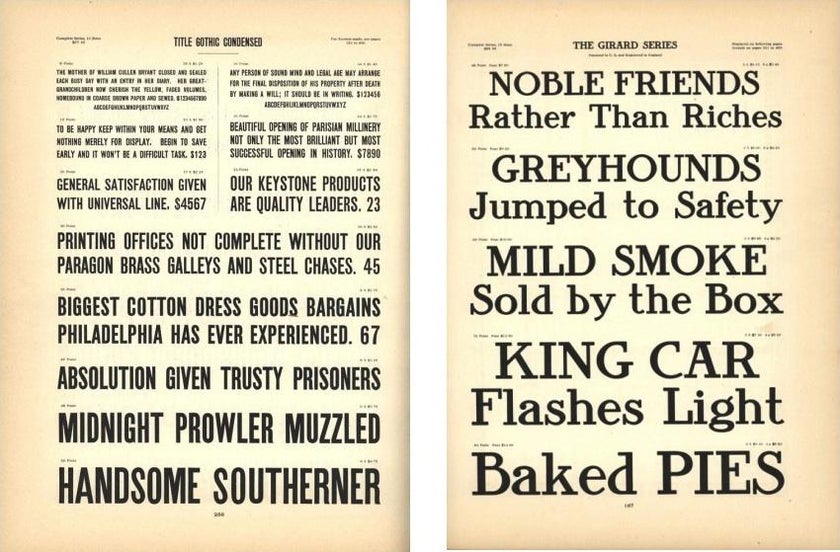

Also at the Semiotic Machines conference a few weeks ago, the US scholar Lisa Gitelman made a very interesting connection between early type specimens – the booklets published by type foundries both to advertise and demonstrate how to compose text with their typefaces (see Reynolds 2022) – and contemporary Large Language Models (LLMs). According to Gitelman, we could think of these specimens as early examples of the training data AI models use to learn how to configure proper pieces of text. While this connection may at first seem merely analogical, perhaps even metaphorical, the truth is that it signals a very interesting and powerful way to problematize contemporary AI models.

Certainly, type foundry specimens were used by print shop workers to guide their work in composing movable type – i.e., typesetting – on so-called composing sticks (see Reynolds 2019). Of course, they didn’t use these specimens to learn how to actually write a word, but rather how to visually process the typesetting variations a given typeface offered, which might include composing with different styles, sizes, glyphs, as well as issues of space within the letters of a word (tracking), or within a particular pair of letters in a word (kerning). In short, the relationship between the typesetter and the specimens involved a craft in the realm of variation, form, space, and background – on the other hand, it was the manuscript that told the typesetter what to compose.

Gitelman’s connection is interesting because it makes clear that the composition of text on the page of a book or newspaper has historically been the product of human labor – a skilled and refined human craft, one might add. In this vein, I asked Gitelman at the conference if she thought that in the midst of this connection between type specimens and LLMs, we could include Shannon’s The Mathematical Theory of Communication (1963 [1949]) to continue to trace this question of labor. She replied that she found this question of labor central, but didn’t see the point of including Shannon’s work in that tracing. Then, with Gitelman’s presentation slides in front of me, showing a beautiful picture of an old type specimen, my mental reference to Shannon’s theory was, of course, also visual. However, faced with the impossibility of presenting the material or rather visual proof of my reference there, I refrained from explaining what I meant.

In chapter one of Shannon’s The Mathematical Theory of Communication, the section entitled “The Series of Approximations to English” presents six paragraphs of text that introduce the use of an “artificial language” to incrementally automate the development of “natural language” – English being the natural language of reference in this case (Shannon 1963 [1949], 42). The 26 letters of the alphabet plus the space are the symbols used for this technique – i.e., 27 symbols in total. The first paragraph shows a sequence in which each letter is weighted with the same probability value (see ibid.). The second paragraph shows a sequence in which each letter is weighted with the value of probability it has in the reference natural language – e.g., the letter E is given the weight .12 (see ibid.). Starting with the third paragraph, n-grams are used. Thus, the third paragraph is developed by using digrams – i.e., 2-grams – which means that “[a]fter a letter is chosen, the next one is chosen in accordance with the frequencies with which the various letters follow the first one” (ibid., 43). The fourth paragraph shows the use of a similar approach, but now with trigrams. With this, say, accumulated knowledge, the fifth and sixth paragraphs are then composed with the probability values assigned to the words instead of the letters – i.e., the probability of one word following another (see ibid., 43–44). As the image below shows, the last paragraph resembles English in a surprising and eerie way.

These were the pages of Shannon’s work that came to my mind when I saw Gitelman’s presentation. As it may be evident now, there is a general visual similarity between these, say, artificial sequences and those published in the early type specimens Gitelman alluded to. But more fundamentally, both cases are series of variations. The specimens represent variations in style and form, and Shannon’s series of approximations represent statistical probabilities of occurrence. Of course, there are also differences between the two. Specimens are the product of skilled human labor, while Shannon’s series are the product of computational automation. And that’s precisely the point here. Even if it sounds a little too obvious, this connection between specimens and statistically generated text is a good reminder that what automation automates is no other thing than human labor. It’s true that, in a strict historical sense, what Shannon’s series automated was the transmission of either radio or telephone signals – which in turn was the, still inaccurate, automation of telegraphy. But even these processes of signal transmission involved human operators. That’s why Gitelman’s connection is so interesting. Because the nature of typesetting reveals the unavoidable material and skillful condition of human labor in this context.

This is important now, because contemporary AI systems are most often seen, or rather branded, as the automation of abstract human skills – e.g., reasoning, learning, or, more generally, intelligence. And while these kinds of competences are certainly at stake in the context of contemporary AI systems, the problem with this characterization is that it obscures issues of pure, material human labor – issues that Gitelman’s analogy brings to the fore.

Just yesterday, Matteo Pasquinelli published in e-flux a response (2024) to the review of his book The Eye of the Master that appeared last month in the journal Critical Inquiry (Kohlbry 2024). According to Pasquinelli, the critical claims made by Kohlbry reveal a central tension among Marxist-leaning thinkers of contemporary technologies – the tension between the labor-form theory (Pasquinelli’s) and the value-form theory (Kohlbry’s?). In other words, whether contemporary technologies such as AI automate material labor or rather more abstract processes such as those encapsulated in the value theory of automation – i.e., that contemporary AI systems are semiotic machines and thus an extreme technological instantiation of the relation between use value and exchange value (see Pasquinelli 2024).

I think that this debate is fundamental today. And while I agree with Pasquinelli that a mix of these two approaches will produce the more comprehensive analyses of AI systems (see ibid.), analogical gestures like Gitelman’s, help us return to aspects that often seem buried under the pile of labels and verbal trends that the AI hype has produced.